The overarching motivation of my research is to advance the frontiers of trustworthy artificial intelligence (AI). My current research focuses on the fundamental limits of, and practical methods for, federated and explainable machine learning. The following grants support this work:

- A Mathematical Theory of Trustworthy Federated Learning (MATHFUL). Funded by the Research Council of Finland (Decision #363624).

- A Mathematical Theory of Federated Learning (TRUST-FELT). Funded by the Jane and Aatos Erkko Foundation (Decision #A835).

- Forward-Looking AI Governance in Banking & Insurance (FLAIG). Supported by Business Finland.

Federated Learning: Computational and Statistical Innovations

Federated Learning for Network-Structured Data

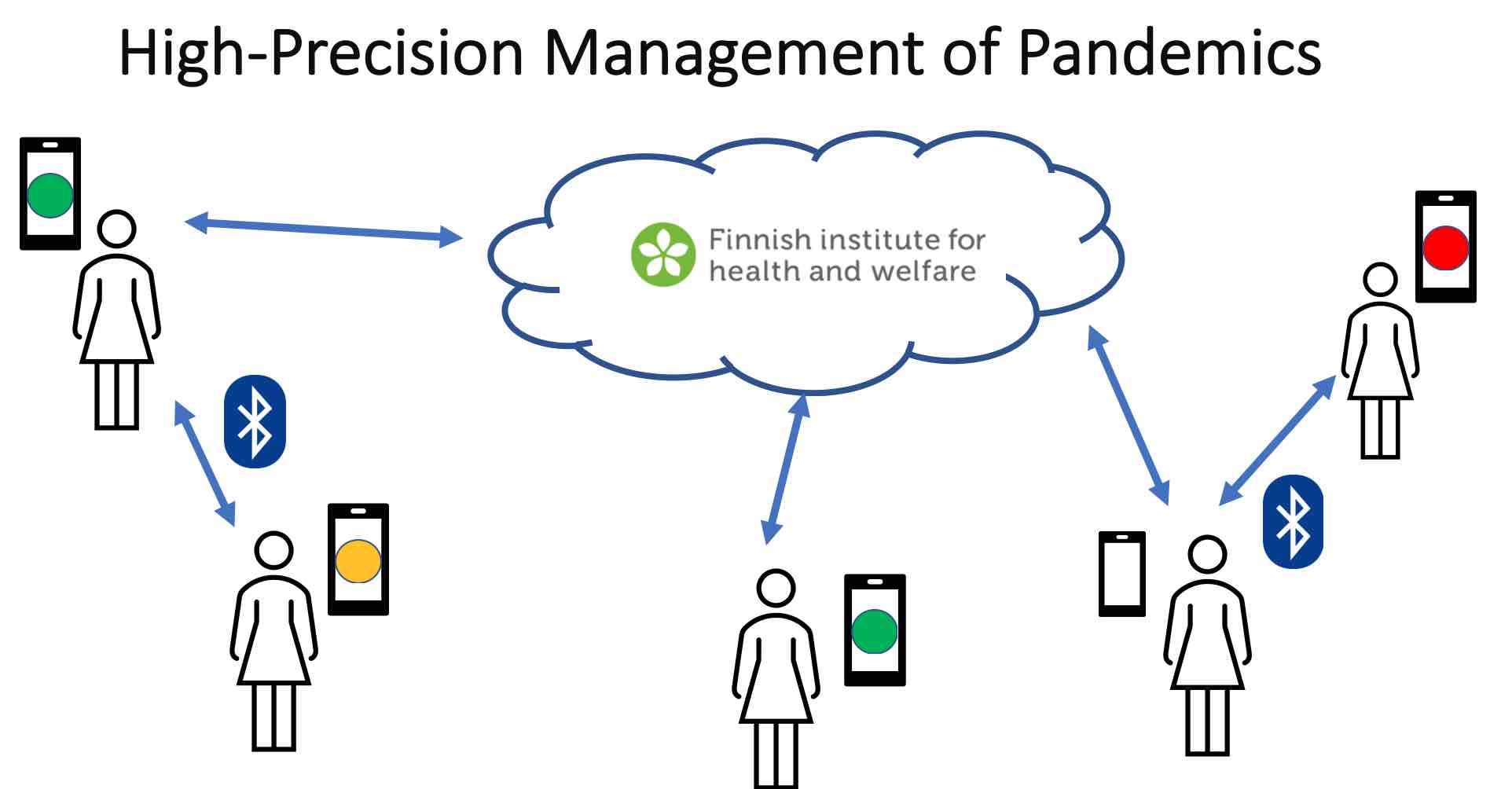

The era of big data over networks demands solutions for handling interconnected, heterogeneous datasets. A prime example is pandemic management, where wearables and smartphones generate local datasets tied by physical, social, or biological network structures. These datasets often vary in statistical properties but exhibit intrinsic relationships.

My research introduces networked exponential families, a probabilistic model for local datasets that are related by a network structure. The model enables:

- Adaptive Data Pooling: Groups similar datasets to build personalized predictions.

- Privacy-Preserving Techniques: Ensures data confidentiality in federated settings.

- Scalable Optimization: Employs robust methods to handle imperfections in data and computation.
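The idea of adaptive data pooling can be illustrated with a small sketch of generalized total variation minimization, the technique named in the first featured publication. The sketch rests on several assumptions that are not taken from the publications: local linear models, a squared Euclidean penalty on weight differences across edges, and a direct linear solve instead of a distributed algorithm. The function name `gtv_min_squared` and the toy data are hypothetical.

```python
import numpy as np

def gtv_min_squared(X, y, edges, lam):
    """Minimize sum_i ||X_i w_i - y_i||^2 / m_i + lam * sum_{(i,j,a)} a * ||w_i - w_j||^2.

    X, y  : lists of local feature matrices and label vectors, one per node
    edges : list of (i, j, a) with edge weight a > 0
    Illustrative only: the objective is quadratic, so it is solved in closed form.
    """
    n, d = len(X), X[0].shape[1]
    Q = np.zeros((n * d, n * d))  # half the Hessian of the objective
    b = np.zeros(n * d)
    for i in range(n):
        m = X[i].shape[0]
        s = slice(i * d, (i + 1) * d)
        Q[s, s] += X[i].T @ X[i] / m   # local least-squares term
        b[s] += X[i].T @ y[i] / m
    I = np.eye(d)
    for i, j, a in edges:
        si, sj = slice(i * d, (i + 1) * d), slice(j * d, (j + 1) * d)
        # graph Laplacian coupling: penalizes ||w_i - w_j||^2 along each edge
        Q[si, si] += lam * a * I
        Q[sj, sj] += lam * a * I
        Q[si, sj] -= lam * a * I
        Q[sj, si] -= lam * a * I
    return np.linalg.solve(Q, b).reshape(n, d)

# Toy network: two clusters of three nodes, each node holds only 2 samples
# of a 3-dimensional linear model, so no node can fit its model alone.
rng = np.random.default_rng(0)
w_true = np.array([[1.0, -1.0, 0.5]] * 3 + [[-2.0, 3.0, 1.0]] * 3)
X = [rng.normal(size=(2, 3)) for _ in range(6)]
y = [X[i] @ w_true[i] for i in range(6)]
edges = [(0, 1, 1.0), (1, 2, 1.0), (0, 2, 1.0),
         (3, 4, 1.0), (4, 5, 1.0), (3, 5, 1.0)]

W = gtv_min_squared(X, y, edges, lam=1.0)
print(np.round(W, 3))  # one row of learned weights per node
```

In this noise-free toy setup the graph has no edges between the two clusters, so nodes pool information only with their own cluster and each recovers its cluster's weight vector. With noisy labels or edges across clusters, the choice of `lam` would trade off local fit against agreement with neighbors.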

Featured Publications

- “Clustered Federated Learning via Generalized Total Variation Minimization,” Y. SarcheshmehPour, Y. Tian, L. Zhang, A. Jung, IEEE Transactions on Signal Processing, 2023. DOI: 10.1109/TSP.2023.3322848

- “On the Duality Between Network Flows and Network Lasso,” A. Jung, IEEE Signal Processing Letters, 2020. DOI: 10.1109/LSP.2020.2998400

- “Networked Exponential Families for Big Data Over Networks,” A. Jung, IEEE Access, 2020. DOI: 10.1109/ACCESS.2020.3033817

- “Semi-Supervised Learning in Network-Structured Data via Total Variation Minimization,” A. Jung et al., IEEE Transactions on Signal Processing, 2019. DOI: 10.1109/TSP.2019.2953593

- “Localized Linear Regression in Networked Data,” A. Jung and N. Tran, IEEE Signal Processing Letters, 2019. DOI: 10.1109/LSP.2019.2918933

Explainable AI (XAI): Personalized and Transparent Models

Building Trust Through Personalized Explainability

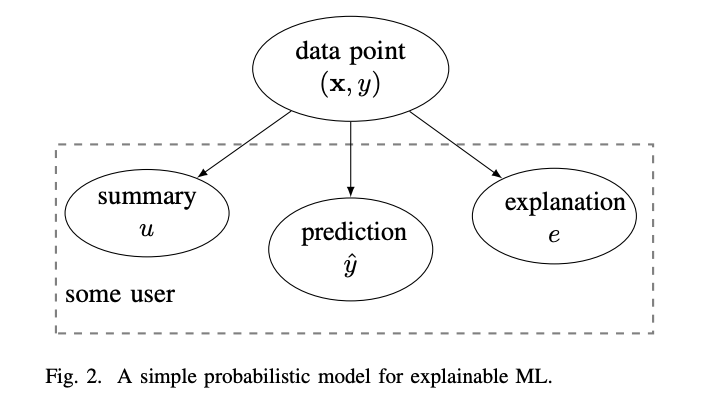

For AI systems to gain widespread acceptance, their predictions must be explainable. My research focuses on developing personalized explanations tailored to individual users. The approach scores an explanation by how much it reduces the conditional entropy of a prediction given what the user already knows, so that explanations align with the user’s knowledge and needs.
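As a rough illustration of the entropy-based score, the following sketch estimates the reduction H(prediction | summary) − H(prediction | summary, explanation) from paired samples. It assumes discrete variables and a simple plug-in (empirical frequency) estimator, which is a choice made for this example and not necessarily the estimator used in the publications. The function names and toy data are hypothetical.

```python
from collections import Counter
from math import log2

def cond_entropy(target, given):
    """Plug-in estimate of H(target | given) in bits from paired samples."""
    n = len(target)
    joint = Counter(zip(given, target))  # counts of (given, target) pairs
    marg = Counter(given)                # counts of given alone
    return -sum(c / n * log2(c / marg[g]) for (g, _), c in joint.items())

def explanation_gain(pred, summary, explanation):
    """Reduction in conditional entropy of the prediction due to the explanation."""
    with_expl = list(zip(summary, explanation))
    return cond_entropy(pred, summary) - cond_entropy(pred, with_expl)

# Toy data: the user summary says nothing about the prediction.
summary = [0, 0, 0, 0, 1, 1, 1, 1]
pred    = [0, 1, 0, 1, 0, 1, 0, 1]

informative = [0, 1, 0, 1, 0, 1, 0, 1]  # reveals the prediction
redundant   = summary                    # repeats what the user already knows

print(explanation_gain(pred, summary, informative))  # 1.0 bit
print(explanation_gain(pred, summary, redundant))    # 0.0 bits
```

An explanation that only repeats the user's own summary scores zero, while one that resolves the remaining uncertainty about the prediction scores the full bit. This matches the intuition that a useful explanation must tell the user something new.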

Key Features

- User-Centric Explanations: Accounts for user background and preferences.

- Model-Agnostic Framework: Compatible with any machine learning model.

- Efficient Implementation: Requires only data points, their predictions, and user-provided summaries as training data.

Featured Publications

- “Explainable Empirical Risk Minimization,” L. Zhang, G. Karakasidis, A. Odnoblyudova, et al., Neural Computing and Applications, 2024.

- “An Information-Theoretic Approach to Personalized Explainable Machine Learning,” A. Jung and P. H. J. Nardelli, IEEE Signal Processing Letters, 2020. DOI: 10.1109/LSP.2020.2993176

Keywords

Federated Learning, Explainable AI, Big Data Over Networks, Networked Exponential Families, Personalized Predictions, Total Variation Minimization, Machine Learning Explainability, Trustworthy AI